Important Terminologies of ANNs

This section introduces the various terminologies related to ANNs.

Weights

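In the architecture of an ANN, each neuron is connected to other neurons by means of directed communication links, and each communication link is associated with weights. The weights contain information about the input. This information is used by the net to solve a problem. The weights can be represented in terms of a matrix. The weight matrix can also be called the connection matrix. To form a mathematical notation, it is assumed that there are "n" processing elements in an ANN and that each processing element has "m" adaptive weights, so the weight matrix $W$ is

$$
W = \begin{bmatrix} w_1^T \\ w_2^T \\ \vdots \\ w_n^T \end{bmatrix}
  = \begin{bmatrix}
      w_{11} & w_{12} & \cdots & w_{1m} \\
      w_{21} & w_{22} & \cdots & w_{2m} \\
      \vdots & \vdots &        & \vdots \\
      w_{n1} & w_{n2} & \cdots & w_{nm}
    \end{bmatrix}
$$

where $w_i = [w_{i1}, w_{i2}, \ldots, w_{im}]^T$, $i = 1, 2, \ldots, n$, is the weight vector of processing element "i" and $w_{ij}$ is the weight from processing element "i" (source node) to processing element "j" (destination node).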
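As a rough illustration of this layout, the sketch below stores a weight matrix as a list of rows, one row per processing element. The sizes, the numeric values, and the helper name `weight` are hypothetical and chosen only for the example.

```python
# Hypothetical sizes and values, used only to illustrate the layout.
n, m = 3, 2  # n processing elements, m adaptive weights per element

# Row i-1 holds the weight vector w_i = [w_i1, ..., w_im].
W = [
    [0.2, -0.5],
    [0.7, 0.1],
    [-0.3, 0.4],
]


def weight(W, i, j):
    """Return w_ij using the 1-based indices of the text (hypothetical helper)."""
    return W[i - 1][j - 1]


# Sanity check: the matrix really is n rows by m columns.
assert len(W) == n and all(len(row) == m for row in W)

w_2 = W[1]               # weight vector of processing element 2
w_21 = weight(W, 2, 1)   # single weight w_21
print(w_2, w_21)
```

Storing one row per processing element keeps each weight vector contiguous, which matches the row-wise definition of the matrix.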

If the weight matrix W contains all the adaptive elements of an ANN, then the set of all W matrices will determine the set of all possible information processing configurations for this ANN. The ANN can be realized by finding an appropriate matrix W. Hence, the weights encode long-term memory (LTM) and the activation states of neurons encode short-term memory (STM) in a neural network.

Bias

The bias included in the network has an impact on calculating the net input. The bias is included by adding a component $X_0 = 1$ to the input vector $X$. Thus, the input vector becomes

$$X = (1, X_1, \ldots, X_i, \ldots, X_n)$$

The bias is considered like another weight, that is, $w_{0j} = b_j$. Consider a simple network with bias. The net input to the output neuron $Y_j$ is calculated as

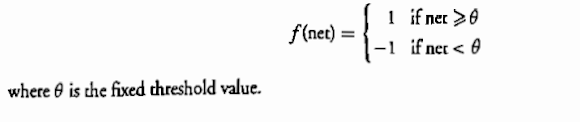
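A minimal sketch of this calculation follows, assuming the net input is the bias plus the weighted sum of the inputs, which is what treating the bias as a weight on a constant input of 1 implies. The function names and the sample numbers are hypothetical.

```python
def net_input(x, w, b):
    """Net input for one output neuron: b + sum_i x_i * w_i."""
    return b + sum(xi * wi for xi, wi in zip(x, w))


def net_input_augmented(x, w, b):
    """Same quantity, with the bias folded in as weight w_0 = b on input x_0 = 1."""
    x_aug = [1.0] + list(x)   # prepend the constant component 1
    w_aug = [b] + list(w)     # prepend the bias as weight w_0
    return sum(xi * wi for xi, wi in zip(x_aug, w_aug))


# Hypothetical inputs, weights, and bias.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, 0.1]
b = 0.25

y1 = net_input(x, w, b)
y2 = net_input_augmented(x, w, b)
print(y1, y2)
```

Both functions give the same value up to floating-point rounding, which is why the bias can be handled as just another weight during training.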

Threshold

The threshold is a set value based upon which the final output of the network may be calculated. The threshold value is used in the activation function. A comparison is made between the calculated net input and the threshold to obtain the network output. For each and every application, there is a threshold limit. Consider a direct current (DC) motor. If its maximum speed is 1500 rpm, then the threshold based on the speed is 1500 rpm. If the motor is run at a speed higher than its set threshold, it may damage the motor coils. Similarly, in neural networks, based on the threshold value, the activation functions are defined and the output is calculated. The activation function using threshold can be defined as a step function of the net input, whose output depends on whether the net input reaches the threshold.
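One common form of such a function, assumed here for illustration, returns +1 when the net input is at least the threshold and -1 otherwise. The name `theta`, the output values, and the choice of "at least" rather than "strictly greater" are assumptions and not taken from the text.

```python
def threshold_activation(net, theta, high=1, low=-1):
    """Step activation: `high` if net >= theta, else `low`.

    The bipolar outputs (+1 / -1) are an assumed default; pass low=0
    for a binary variant.
    """
    return high if net >= theta else low


theta = 0.5  # hypothetical threshold value
outputs = [threshold_activation(net, theta) for net in (0.2, 0.5, 0.7)]
print(outputs)
```

Keeping the two output levels as parameters makes it easy to switch between bipolar and binary conventions without changing the comparison itself.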

Learning Rate

The learning rate is denoted by "α." It is used to control the amount of weight adjustment at each step of training. The learning rate, ranging from 0 to 1, determines the rate of learning at each time step.
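To make the effect of the learning rate concrete, here is a small sketch that assumes a delta-rule style update, in which each weight changes by the learning rate times an error term times the corresponding input. The update rule, the function name, and the sample values are assumptions for illustration; the text does not fix a particular rule.

```python
def update_weights(w, x, error, alpha):
    """One training step: w_i <- w_i + alpha * error * x_i (assumed rule)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("learning rate is expected to lie in [0, 1]")
    return [wi + alpha * error * xi for wi, xi in zip(w, x)]


# Hypothetical starting weights, input pattern, and error signal.
w = [0.0, 0.0]
x = [1.0, 2.0]
error = 1.0

small_step = update_weights(w, x, error, alpha=0.1)
large_step = update_weights(w, x, error, alpha=0.5)
print(small_step, large_step)
```

With the same input and error, the larger learning rate moves each weight five times as far in a single step.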

Momentum Factor

Convergence is made faster if a momentum factor is added to the weight update process. This is generally done in the back propagation network. If momentum has to be used, the weights from one or more previous training patterns must be saved. Momentum helps the net make reasonably large weight adjustments as long as the corrections are in the same general direction for several patterns.
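The sketch below shows one commonly used form of a momentum update, assumed here for illustration: the new weight is the current weight, plus the learning rate times the current correction, plus the momentum factor times the previous weight change. The symbol `mu`, the function names, and the constant correction are hypothetical.

```python
def momentum_step(w, w_prev, correction, alpha, mu):
    """Assumed form: w(t+1) = w(t) + alpha * correction + mu * (w(t) - w(t-1))."""
    return [
        wi + alpha * ci + mu * (wi - wpi)
        for wi, wpi, ci in zip(w, w_prev, correction)
    ]


def train(mu, steps=3, alpha=0.1):
    """Apply the same correction repeatedly, saving the previous weights each step."""
    w_prev, w = [0.0], [0.0]
    for _ in range(steps):
        w_next = momentum_step(w, w_prev, [1.0], alpha, mu)
        w_prev, w = w, w_next   # keep the old weights for the next momentum term
    return w


with_momentum = train(mu=0.5)
without_momentum = train(mu=0.0)
print(with_momentum, without_momentum)
```

Because every correction points in the same direction, the run with momentum travels further in the same number of steps, which is the speed-up the paragraph describes. The previous weights have to be kept between steps to form the momentum term.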

Notations